We Challenged HubSpot's Predictive Lead Scoring And Lost To The Robots

![I Challenged HubSpot's Predictive Lead Scoring And Lost To The Robots [DATA]](https://www.nectafy.com/hubfs/Imported_Blog_Media/NECT-BLOG-hubspotAI-1024x629.jpg)

Lead scoring is crucial because it separates the wheat from the chaff, so to speak. By scoring the leads that come in through your website, your sales team can spend its time only on leads who are interested in your business and who meet your criteria for a good potential customer.

Here’s the HubSpot explanation:

Lead scoring lets you assign a value (a certain number of points) to each lead based on the professional information they've given you and how they've engaged with your website and brand across the internet. It helps sales and marketing teams prioritize leads and increase efficiency.

If you have enterprise-level HubSpot (or a similar tool that can do lead scoring in some capacity), you’ve probably put a lot of work into building lead scoring models that make sense to you. We have, and we wanted to test the lead scoring model Henry created against HubSpot’s predictive model to see how they compared.

Case Study: Creating Our Own Lead Scoring Vs. Using HubSpot’s Predictive Lead Scoring

You can do lead scoring manually in HubSpot, or you can use HubSpot’s predictive lead scoring (AI that tries to score leads for you). We did both.

Henry built a lead scoring model for one of our clients, who had about 1,200 leads coming through their website per month. The goal (likely the goal of any lead scoring model) was to answer this question: If a salesperson had time to pursue just one lead, which lead should he or she pick to maximize the likelihood of turning that lead into a customer? Lead scoring stack-ranks leads based on the information we already have about them and presents that score to the sales team.
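The “one lead” question boils down to sorting leads by score and taking the top one. A minimal Python sketch, assuming a hypothetical `Lead` record with an email and a numeric score (this is not a HubSpot data structure):

```python
from dataclasses import dataclass


@dataclass
class Lead:
    email: str  # hypothetical identifier for the lead
    score: int  # points from whatever scoring model is in use


def stack_rank(leads: list[Lead]) -> list[Lead]:
    """Order leads from highest to lowest score; equal scores keep their input order."""
    return sorted(leads, key=lambda lead: lead.score, reverse=True)


def pick_one(leads: list[Lead]) -> Lead:
    """If a salesperson only has time for one lead, take the top of the ranking."""
    if not leads:
        raise ValueError("no leads to choose from")
    return stack_rank(leads)[0]


leads = [
    Lead("a@example.com", 40),
    Lead("b@example.com", 310),
    Lead("c@example.com", -20),
]
print(pick_one(leads).email)  # prints b@example.com
```

Python’s `sorted` is stable even with `reverse=True`, so two leads with the same score stay in the order they arrived.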

How did we go about finding the right information to score?

In HubSpot, as in any marketing automation platform, you have lead attributes. These are pieces of information you collect about each lead, such as first form submitted, country based on IP address, and number of page views on the site. If a lead has viewed 50+ pages instead of 5, you may think that lead is warmer. And you may be right! But there’s actually a way to prove that out statistically.
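One common way to check a hunch like the page-view one is a two-proportion z-test on the opportunity rates of the two groups. This is a sketch under assumptions: the article doesn’t say which statistical test, if any, was used, and the counts in the example call are made up.

```python
import math


def two_proportion_z_test(opps_a: int, n_a: int, opps_b: int, n_b: int) -> tuple[float, float]:
    """Compare the opportunity rates of two lead groups.

    Returns (z, two-sided p-value) using the pooled-proportion standard error.
    """
    rate_a = opps_a / n_a
    rate_b = opps_b / n_b
    pooled = (opps_a + opps_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both groups have a 0% or 100% rate; the test is undefined")
    z = (rate_a - rate_b) / se
    # Two-sided p-value under the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 40 of 400 leads with 50+ page views became opportunities,
# versus 90 of 2,000 leads with fewer page views.
z, p = two_proportion_z_test(40, 400, 90, 2000)
print(f"z = {z:.2f}, p = {p:.2g}")
```

A small p-value suggests the difference is unlikely to be noise in the sample; it says nothing about how large the difference is.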

How We Built Our Own Model:

We analyzed the 15,000 most recent leads for the client and looked at a number of lead attributes to find correlations. We were searching for attributes whose values turned into sales opportunities at a noticeably better or worse rate than you’d expect.

These are the four attributes we found that had these trends, so we used them to build the scoring model.

- Source: Which online channel – like organic search or paid ads – did the lead come from?
  - Examples: Leads from organic search turned into sales opportunities 32% more often than the average of the whole data set. Referrals turned into opportunities 79% less often than you’d expect.

- First page seen: What is the first web page on the site that the lead landed on?
  - Examples: Leads that visited the homepage first did 32% better than average. Leads that visited the affiliate landing page first did 92% worse.

- Yearly processing volume: This is a custom lead attribute for this company, capturing the amount of sales revenue the lead processes online per year.
  - Examples: Leads that said they had $0 - $60,000 in annual processing volume did 42% worse than average. Those with $60,000 - $250,000 did 74% better.

- IP Country: The country the lead is in, based on their IP address.
  - Examples: Leads from Israel performed 507% better than expected, whereas leads from France performed 37% worse.
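The “X% better/worse than average” figures read as lift: an attribute value’s opportunity rate compared with the opportunity rate of the whole data set. A minimal sketch, assuming that reading (the article doesn’t spell out the formula) and assuming each lead is a dict with hypothetical keys such as `source` and `became_opportunity`:

```python
from collections import defaultdict


def lift_by_value(
    leads: list[dict], attribute: str, outcome: str = "became_opportunity"
) -> dict[str, float]:
    """Percent difference between each value's opportunity rate and the overall rate.

    +32.0 means "32% more opportunities than average"; -79.0 means "79% fewer".
    """
    overall_rate = sum(1 for lead in leads if lead[outcome]) / len(leads)
    if overall_rate == 0:
        raise ValueError("no opportunities in the data set; lift is undefined")
    counts = defaultdict(lambda: [0, 0])  # attribute value -> [opportunities, leads]
    for lead in leads:
        bucket = counts[lead[attribute]]
        bucket[0] += 1 if lead[outcome] else 0
        bucket[1] += 1
    return {
        value: round((opps / n / overall_rate - 1) * 100, 1)
        for value, (opps, n) in counts.items()
    }


# Hypothetical sample: 3 of 4 organic leads and 1 of 6 referral leads converted.
sample = [{"source": "organic", "became_opportunity": i < 3} for i in range(4)] + [
    {"source": "referral", "became_opportunity": i < 1} for i in range(6)
]
print(lift_by_value(sample, "source"))
```

On this reading, Israel’s +507% means those leads converted at roughly 6.07 times the average rate.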

Results

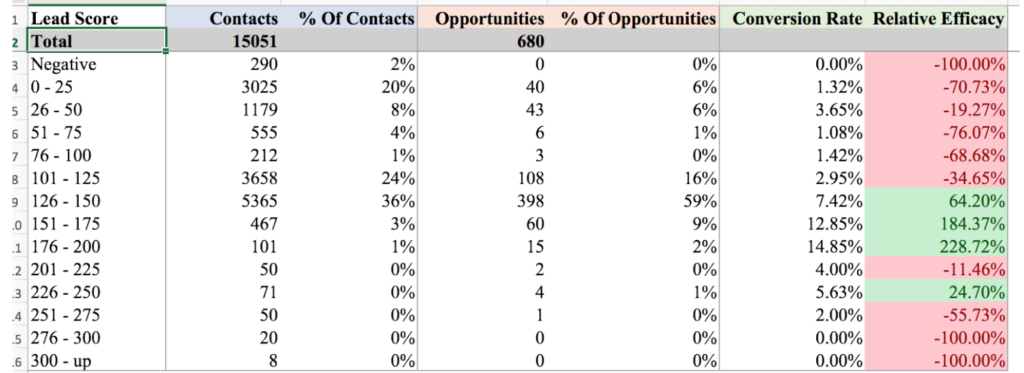

Our Model

If a new lead came in, we would automatically look at the four factors above and allocate points based on them. We then scored leads on all of those attributes to see how the model was actually performing.
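The article doesn’t say how many points each factor was worth. As a purely hypothetical sketch, the table below reuses the lift percentages from the attribute list as point values and sums them across the four attributes; the attribute keys and value labels are illustrative, not HubSpot property names.

```python
# Hypothetical points table: each value's points equal the lift (%) reported above.
# Values not listed (e.g. other countries) contribute 0 points.
POINTS: dict[str, dict[str, int]] = {
    "source": {"organic_search": 32, "referral": -79},
    "first_page": {"homepage": 32, "affiliate_landing_page": -92},
    "processing_volume": {"$0-$60k": -42, "$60k-$250k": 74},
    "ip_country": {"Israel": 507, "France": -37},
}


def score_lead(lead: dict[str, str]) -> int:
    """Add up the points for each of the four attributes; missing or unknown values add 0."""
    return sum(POINTS[attr].get(lead.get(attr), 0) for attr in POINTS)


new_lead = {
    "source": "organic_search",
    "first_page": "homepage",
    "processing_volume": "$60k-$250k",
    "ip_country": "Israel",
}
print(score_lead(new_lead))  # prints 645
```

One property of a purely additive table like this, visible even in the toy version, is that a single large weight can dominate the total: a lead from Israel with no other matching values still scores 507.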

In the chart above, as you move through the far left column from a negative lead score to a lead score of 300 and up, the far right column should move linearly from red (-100% efficacy) to green (100%+). That movement would mean the lead scoring was working perfectly: leads in each higher score bracket would turn into opportunities at a higher rate than leads in the bracket below. As you can see in the chart, that’s not the case. The model falls apart especially at the higher scores.
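Checking a model the way the chart does means grouping scored leads into brackets, computing each bracket’s efficacy (its opportunity rate relative to the overall rate), and confirming that efficacy rises from one bracket to the next. A minimal sketch; the bracket edges are an assumption, since the article only mentions negative scores at one end and 300 and up at the other.

```python
from bisect import bisect_right
from typing import Optional


def efficacy_by_bracket(
    scored: list[tuple[int, bool]], edges: list[int]
) -> list[Optional[float]]:
    """Efficacy (%) per score bracket: bracket opportunity rate / overall rate - 1.

    `scored` holds (lead score, became an opportunity) pairs. With ascending `edges`
    [e0, e1, ..., ek], the brackets are: below e0, [e0, e1), ..., [ek, infinity).
    Empty brackets get None.
    """
    overall = sum(converted for _, converted in scored) / len(scored)
    if overall == 0:
        raise ValueError("no opportunities in the data set; efficacy is undefined")
    totals = [0] * (len(edges) + 1)
    opps = [0] * (len(edges) + 1)
    for score, converted in scored:
        i = bisect_right(edges, score)  # index of the bracket this score falls into
        totals[i] += 1
        opps[i] += converted
    return [None if n == 0 else (o / n / overall - 1) * 100 for o, n in zip(opps, totals)]


def rises_every_bracket(efficacies: list[Optional[float]]) -> bool:
    """True if each non-empty bracket beats the one below it (what a working model shows)."""
    values = [e for e in efficacies if e is not None]
    return all(lower < higher for lower, higher in zip(values, values[1:]))


edges = [0, 100, 200, 300]  # brackets: <0, 0-99, 100-199, 200-299, 300+
scored = [(-50, False), (-10, False), (20, False), (50, False), (60, True),
          (70, False), (150, True), (160, False), (350, True), (400, True)]
result = efficacy_by_bracket(scored, edges)
print(result, rises_every_bracket(result))
```

The check is strict (each bracket must beat the one below), which matches the “higher rate than the bracket below” test described above.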

Henry’s Model Vs. HubSpot’s Model

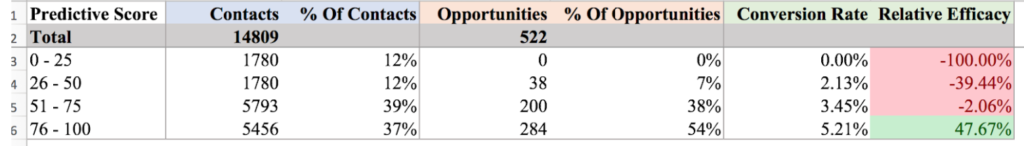

In the chart below you can see the results from using HubSpot’s predictive lead scoring. This tool automatically calculates a score from 0-100 based on a model that HubSpot’s AI builds.

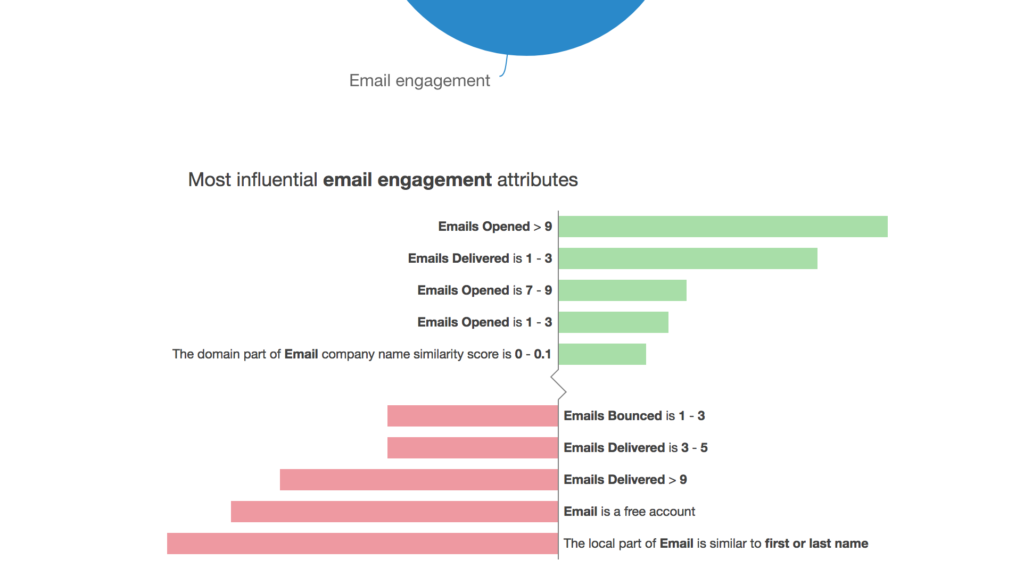

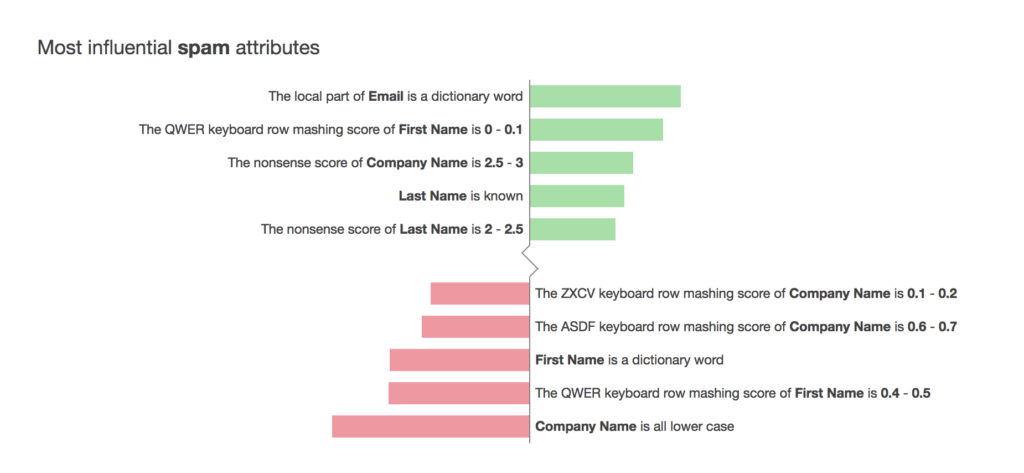

HubSpot automatically looked at ten attributes in each of six categories (email engagement, social engagement, spam, demographic information, behavior, and company information) to determine its scores. The images below explain how some of these attributes were assigned points.

As you can see in the chart, HubSpot’s score was perfectly linear and beat Henry’s custom-built scoring system. All leads with predictive scores of 0 to 75 turned into opportunities less frequently than you’d expect, and all leads with predictive scores of 76 to 100 turned into opportunities more frequently than you’d expect.

Takeaways

HubSpot’s predictive lead scoring works, and we recommend incorporating it into your lead scoring system. If you have HubSpot Enterprise, put more trust in it than you think you should. Set it up and let the predictive scoring model do the work for you. Henry spent a ton of time doing this on his own, and he still didn’t do as good a job as the HubSpot robots did.

There are two ways to go from here:

- If you have manual scoring set up, add in predictive scoring as another factor.

For example, in your manual lead scoring screen, you can “Add a new set” to give zero points to a predictive lead score between 0 and 25 and 100 points to a predictive lead score between 75 and 100. This introduces predictive lead scoring into your manual lead scoring as one piece of the puzzle, not the entire puzzle.

- If you don’t have either but are thinking about doing lead scoring, use predictive scoring right away. Don’t waste time trying to build out your own lead scoring model.
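The example in the first option (zero points for a predictive score of 0 to 25, 100 points for 75 to 100) is simple bucketing logic. A sketch of it in Python, for illustration only: in HubSpot this is configured in the manual scoring screen, not written as code, the function names are hypothetical, and the example doesn’t say what scores between 26 and 74 should get, so that is left as a parameter defaulting to 0.

```python
def predictive_points(predictive_score: int, middle_points: int = 0) -> int:
    """Manual-score points for a HubSpot predictive score (0-100).

    0-25 -> 0 points, 75-100 -> 100 points. Scores 26-74 aren't covered by the
    example, so they get `middle_points` (an assumption, defaulting to 0).
    """
    if not 0 <= predictive_score <= 100:
        raise ValueError("predictive score must be between 0 and 100")
    if predictive_score >= 75:
        return 100
    if predictive_score <= 25:
        return 0
    return middle_points


def combined_score(manual_score: int, predictive_score: int) -> int:
    """Manual points plus the predictive-score set: one piece of the puzzle, not the whole."""
    return manual_score + predictive_points(predictive_score)


print(combined_score(40, 90))  # prints 140
```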

Trust the robots.

In this case, the robots won. You should consider using predictive lead scoring in HubSpot: it will save you a ton of time and do a better job than what you’re building out yourself. That isn’t always the case, but sometimes it does make more sense to go with a product that’s already been designed for the job.
